Santa Clara, California: Nvidia has reaffirmed its dominance in the artificial intelligence sector with the announcement of a groundbreaking lineup of AI GPUs at the Nvidia GPU Technology Conference (GTC) 2025. The new roadmap introduces the Blackwell Ultra GB300, set to launch later this year, followed by the Vera Rubin (2026) and Rubin Ultra (2027), each promising unprecedented performance enhancements and computing power.

Nvidia’s aggressive expansion in AI comes as the company earns a staggering $2,300 in profit per second, driven by a data center business that now dwarfs its gaming GPU segment. This seismic shift underscores Nvidia’s growing focus on AI infrastructure and enterprise solutions.
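For a sense of scale, the $2,300-per-second profit figure can be annualized. This is a back-of-the-envelope sketch that assumes the rate holds constant over a 365-day year; real quarterly earnings fluctuate.

```python
# Annualize the reported profit rate.
# Assumption: $2,300/second is held constant for a full 365-day year.
PROFIT_PER_SECOND_USD = 2_300
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 seconds

annual_profit_usd = PROFIT_PER_SECOND_USD * SECONDS_PER_YEAR
print(f"~${annual_profit_usd / 1e9:.1f} billion per year")  # ~$72.5 billion per year
```

At that pace, Nvidia would clear roughly $72.5 billion in profit over a year.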

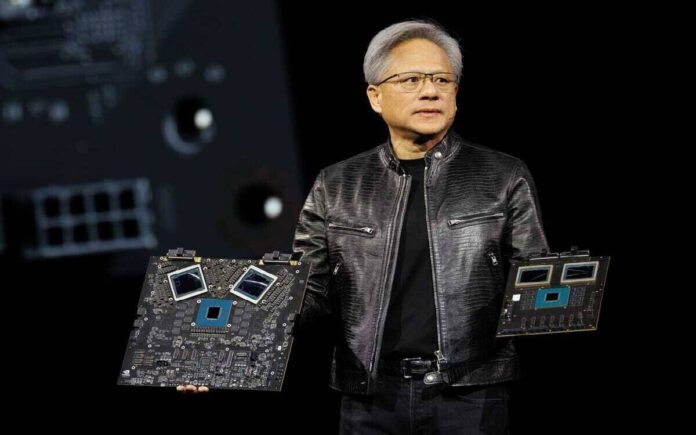

“The industry needs 100 times more than we thought we needed this time last year,” said Nvidia’s founder and CEO, Jensen Huang, emphasizing the insatiable demand for AI processing power.

Blackwell Ultra GB300: A Game-Changing AI Upgrade

The Blackwell Ultra GB300, scheduled for launch in the second half of 2025, serves as an enhanced iteration of the original Blackwell architecture rather than a complete overhaul.

- AI Performance: 20 petaflops of FP4 inference, matching the original Blackwell GPU.

- Memory Expansion: 288GB of HBM3e memory, up from 192GB on the original Blackwell.

- Enterprise Cluster: The DGX GB300 “Superpod” will deliver 11.5 exaflops of FP4 computing and 300TB of memory, a significant leap from the 240TB offered by Blackwell.
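The memory upgrades listed above translate into straightforward percentage gains. A minimal sketch using only the capacities quoted in this article; the helper name `pct_increase` is hypothetical and chosen for illustration.

```python
def pct_increase(old: float, new: float) -> float:
    """Return the percentage increase from old to new."""
    return (new - old) / old * 100

# Capacities as quoted in the article.
gpu_hbm_gain = pct_increase(192, 288)       # per-GPU HBM3e, in GB
superpod_mem_gain = pct_increase(240, 300)  # DGX Superpod memory, in TB

print(f"Per-GPU HBM3e:   +{gpu_hbm_gain:.0f}%")       # +50%
print(f"Superpod memory: +{superpod_mem_gain:.0f}%")  # +25%
```

In other words, each GPU gains half again as much memory, while the cluster-level memory pool grows by a quarter.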

NVIDIA Newsroom (@nvidianewsroom), March 18, 2025: “📣 Announcing #NVIDIABlackwell DGX Personal Computers. #GTC25 AI developers, researchers, data scientists and students can prototype, fine-tune and inference large models on desktops. Learn more ➡️ https://t.co/spraN9RBpP”

Vera Rubin & Rubin Ultra: Pioneering the Next Era of AI Processing

Set to debut in 2026 and 2027, respectively, Vera Rubin and Rubin Ultra represent Nvidia’s next major leap in AI architecture.

- Vera Rubin: Offers 50 petaflops of FP4 performance, 2.5 times the 20 petaflops of Blackwell Ultra.

- Rubin Ultra: A dual-chip design fusing two Rubin GPUs into one powerhouse, boasting 100 petaflops of FP4 performance and 1TB of HBM memory.

- NVL576 Rack System: Delivers 15 exaflops of FP4 inference and 5 exaflops of FP8 training, which Nvidia says is 14 times the performance of the Blackwell Ultra rack shipping this year.
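The generational multipliers quoted above can be checked against the article's own FP4 figures. This is a sketch under one stated assumption: that the "14x" claim compares the NVL576 rack with the 1.1-exaflop Blackwell Ultra NVL72 rack, not one chip with another. The article does not spell out the basis of the comparison.

```python
# FP4 figures as quoted in this article.
BLACKWELL_ULTRA_PF = 20  # petaflops, per GPU
VERA_RUBIN_PF = 50       # petaflops, per GPU
RUBIN_ULTRA_PF = 100     # petaflops, per dual-chip Rubin Ultra
NVL72_EF = 1.1           # exaflops, Blackwell Ultra NVL72 rack
NVL576_EF = 15           # exaflops, Rubin Ultra NVL576 rack

# Chip-to-chip comparisons.
rubin_vs_bw_ultra = VERA_RUBIN_PF / BLACKWELL_ULTRA_PF
rubin_ultra_vs_bw_ultra = RUBIN_ULTRA_PF / BLACKWELL_ULTRA_PF
# Assumption: the 14x claim is a rack-to-rack comparison.
rack_vs_rack = NVL576_EF / NVL72_EF

print(f"Vera Rubin vs Blackwell Ultra:  {rubin_vs_bw_ultra:.1f}x")        # 2.5x
print(f"Rubin Ultra vs Blackwell Ultra: {rubin_ultra_vs_bw_ultra:.1f}x")  # 5.0x
print(f"NVL576 vs NVL72 rack:           {rack_vs_rack:.1f}x")             # 13.6x
```

The rack-level ratio of about 13.6 rounds to the quoted 14x. A chip-level comparison gives only 5x, which suggests the headline multiplier describes whole racks.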

Nvidia is already touting big gains on this year’s hardware: it claims that the Blackwell Ultra NVL72 rack can run DeepSeek-R1 671B and generate responses in just 10 seconds, a 10x speed improvement over the H100 GPU from 2022.

Cutting-Edge AI Hardware: DGX Station & NVL72 Rack

For enterprises seeking high-performance AI computing solutions, Nvidia introduced two new hardware innovations:

DGX Station: A high-performance AI workstation featuring:

- A single GB300 Blackwell Ultra GPU.

- 784GB of unified system memory.

- 800Gbps Nvidia networking.

- 20 petaflops of AI performance.

NVL72 Rack: A next-gen AI powerhouse offering:

- 1.1 exaflops of FP4 computing.

- 20TB of HBM memory.

- 14.4TB/sec networking speeds.
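The NVL72 rack's memory total lines up with the per-GPU capacity quoted earlier. A rough consistency check, assuming the "72" in NVL72 counts Blackwell Ultra GPUs and each carries the 288GB of HBM3e listed above; both are assumptions this article does not state outright.

```python
# Assumption: 72 Blackwell Ultra GPUs per rack, 288GB of HBM3e each.
GPUS_PER_RACK = 72
HBM_PER_GPU_GB = 288

rack_hbm_tb = GPUS_PER_RACK * HBM_PER_GPU_GB / 1000  # decimal terabytes
print(f"{rack_hbm_tb:.1f} TB of HBM per rack")  # 20.7 TB of HBM per rack
```

Roughly 20.7TB is consistent with the "20TB of HBM memory" quoted for the rack.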

Nvidia’s AI Leadership and Vision for the Future

Nvidia’s latest AI innovations come amid reports that the company has already shipped $11 billion worth of Blackwell GPUs in 2025, with top buyers purchasing 1.8 million units to date.

Looking even further ahead, Nvidia’s 2028 GPU architecture has been officially named “Feynman”, after the legendary physicist Richard Feynman, reinforcing Nvidia’s long-term vision for AI and supercomputing innovation.

With these advancements, Nvidia is setting the stage for the next generation of AI breakthroughs and positioning itself to extend its lead in the rapidly evolving landscape of artificial intelligence and high-performance computing.